Source Wars and Bespoke Realities: Wikipedia, Grokipedia, and The Battle for Truth

Control what Wikipedia considers reliable, and you control what machines—and then people—learn about the world…

It’s not a small thing that Larry Sanger, Wikipedia’s co‑founder turned chief critic, has recently been arguing that The Epoch Times should be treated as a reliable source.

Over the past decade, Wikipedia has quietly become the backbone of the internet’s knowledge layer. Volunteer editors argue over wording, vet sources, and hash out disputes in public. It’s slow and sometimes messy, but the premise is simple: if you want to change what a page says, you can jump right in, as long as you’re committed to a neutral point of view and can back your changes with solid citations. The rules are written down. Anyone can read them. Anyone can play.

That model is now under attack. The fight isn’t over individual articles but over who gets to write the rules in the first place, particularly the rules about sources. Should reliability be determined by a volunteer community with published guidelines? By politicians who insist that The Gateway Pundit and The Epoch Times are being unfairly excluded? By Elon Musk’s AI? This isn’t a niche spat among Wikipedians; it’s a struggle over who gets to set the terms of truth for everything downstream of Wikipedia, from search results to AI chatbots. If you believe, for example, that the 2020 election was stolen or that vaccines cause autism, while every credible institution and mainstream media outlet says otherwise, then Wikipedia’s reliable sources policy is a problem to be solved.

In Washington, the fight for reality is masquerading as “oversight.” Republicans such as Ted Cruz, James Comer, and Nancy Mace have demanded the names of Wikipedia’s volunteer arbitrators, insinuated that “taxpayer‑funded academics” are secretly steering content, and pushed to blow up the site’s source‑reliability rules. Tucker Carlson and the All-In Pod boys have devoted episodes to its purported anti-conservative bias. Elon Musk rants about “Wokipedia.”

We’ve seen versions of this before. In 2016, Facebook’s “Trending Topics” feature was accused of bias against conservatives because its human editors were filtering out dubious stories, clickbait, and spam. Even so, leadership’s fear of appearing biased won out. The human curators were fired, the algorithm was left to chase engagement, and the trending feed filled up with conspiracy sludge.

But Wikipedia is harder to kneecap from the inside; there is no editorial team to fire. So the strategy has shifted. Don’t just harangue the referees—build a parallel league with your own rulebook, your own scorekeepers, and your own definition of what counts as a fact.

Which is where the new “alternatives” come in.

Grokipedia and the AI Oracle

Enter Grokipedia: Elon Musk’s AI-generated alternative. It launched last month with 855,279 articles, no human editors, no Talk pages, and no meaningful way to correct errors—just a suggestion box that lets you send a note to the chatbot.

Musk purportedly created Grokipedia to “purge out the propaganda” from the site he alleges has been captured by the wokes; I wrote about his war for reality in an Atlantic article last week.

Musk and others have taken to calling Wikipedia “Wokipedia.” Sanger, who has become an outspoken critic, argues that Wikipedia has adopted what he calls a “GASP” worldview: globalist, academic, secular, progressive. To fix this, he has proposed several reforms. One is to promote accountability by de-anonymizing arbitrators and others with power over the Wikipedia community. Another is to abolish the consensus model so that parallel articles with declared viewpoints can exist (separate “pro-life” and “pro-choice” entries for an abortion-related topic, perhaps). Arguing that partisan bias is what distinguishes the community’s acceptance of CNN and The Washington Post from its avoidance of right-wing outlets such as The Federalist and The Epoch Times, Sanger has also called on Wikipedia to eliminate what he calls “source blacklists,” and other conservatives have eagerly taken up that call.

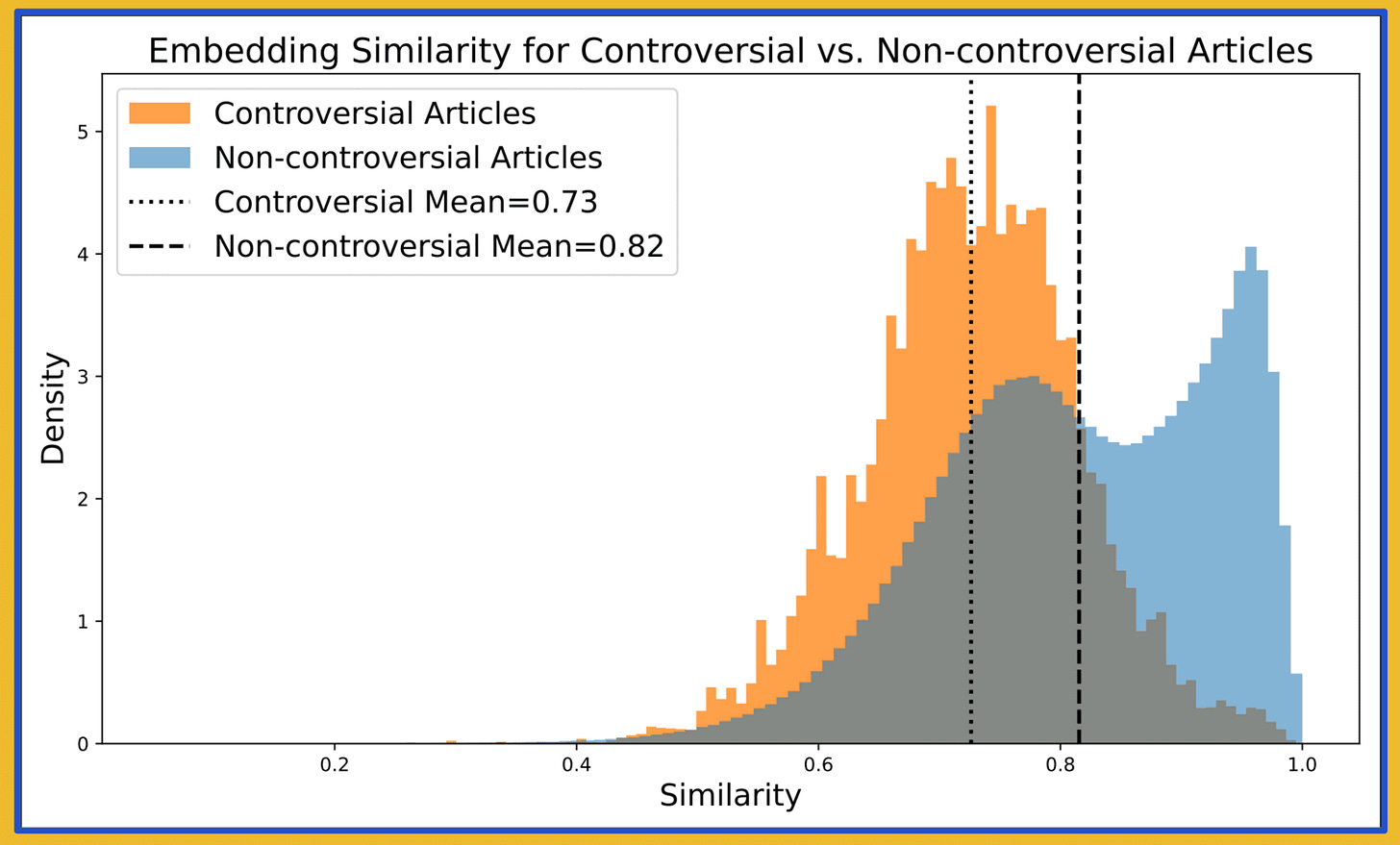

A day after my article came out, Hal Triedman and Alexios Mantzarlis at Cornell Tech released a fascinating new analysis detailing exactly which sources Grokipedia treats as reliable. In a preliminary blog post, Mantzarlis revealed that Grokipedia cited InfoWars 34 times (Wikipedia: 0), the white nationalist forum Stormfront 42 times, and the anti-vaccine site LifeSiteNews 100 times. Global Research and VoltaireNet were also in the mix. Overall, 6% of Grokipedia’s citations, or 2.6 million, come from sources that Wikipedia editors have classified as “deprecated” or “generally unreliable.”

Notably, Grokipedia seems to draw on these sources more frequently for politically sensitive topics. Mantzarlis reports that a majority of Grokipedia is essentially lifted or lightly paraphrased from Wikipedia itself. It’s on the controversial topics that the AI reframes aggressively and brings in the dubious crap: “A remarkable 85.3% of all Grokipedia pages in the controversial category cite what Wikipedia editors deem ‘generally unreliable’ sources, compared to 53% on Wikipedia. Nearly 22% of the articles rely on ‘blacklisted’ sources, compared to 2.0% on Wikipedia.”

On Grokipedia, it’s just not that clear that vaccines don’t cause autism. A whole lot of discredited quacks with retracted papers are given pride of place in the entry. It’s the “teach the controversy” encyclopedia, which is a pity.

Grokipedia also has hallucination issues, a known challenge with AI but a particularly big one for an encyclopedia. I experienced this firsthand. Grokipedia’s original entry about me claimed that my team at the Stanford Internet Observatory censored 22 million tweets during the 2020 election. We didn’t; this was a Twitter Files fabrication that became a public humiliation for Matt Taibbi. The source for the claim was Mike Benz, aka Frame Game, a crank who LARPs as a State Department “whistleblower” despite having worked there for only two months. Michael Shellenberger, another Twitter Files writer, entered the lie into the congressional record in 68 pages of written testimony that also happened to gripe about Hunter Biden’s laptop. Apparently conflating the two, Grok wrote in my entry that we were somehow involved in Twitter’s moderation of the Hunter Biden laptop story. We weren’t; even Shellenberger’s nutty testimony didn’t say that. I flagged the errors for the chatbot. Tonight, around two weeks later, just as I was getting ready to publish this post, it finally fixed them.

On Wikipedia, I can post on a Talk page, engage with human editors, provide counter-evidence, and appeal. On Grokipedia, I’m talking to a bot, and it’s not really clear that it’s listening.

In fact, the automated, faceless nature is the point. Grokipedia mistakes large-scale information retrieval for knowledge, and algorithmic output for neutrality. It replaces deliberation with automation. Elon’s own bio and presence on the site are hagiographic, and as long as the sources and outcomes remain palatable to him, it’s accomplishing its goal.

The Most Honest Effort: Truth Social AI

Meanwhile, Truth Social recently rolled out an AI-powered answer engine, built on Perplexity’s technology, that attracted attention last week. The AI lives entirely inside the platform: a sort of Grok for Truth Social. Truth Social users now never have to leave the echo chamber to ask a question or get a fact-check as they fight with someone on the app. There’s no risk of running into a Google answer engine response.

I spent a few hours playing with it. The results were consistent: no mainstream media citations, except one stray Time reference. Instead, it was Just the News, Fox, The Epoch Times, and The Washington Times, on repeat. Epistemic closure as a service: just a tiny universe of MAGA-approved media.

And yet, when I asked it whether the 2020 election was stolen, it did tell me no. Trump and Devin Nunes may beat it into compliance before the week is out. For now, though, it answers correctly because the Fox pieces it leans on for citations grudgingly acknowledge that the election wasn’t stolen. (Corporate comms teams, take note: if you want to be represented accurately in Truth Social AI, be sure to send correction requests or file defamation lawsuits as needed to get Fox News to write accurate stories.)

Truth Social AI is source-based reality construction as a product. But in some ways, it’s the most honest of the three approaches to reframing the facts. It doesn’t attempt to borrow Wikipedia’s legitimacy while undermining its standards. It doesn’t pretend to be neutral. It simply presents one tribe’s communal, bespoke reality.

From Filter Bubbles to Hermetically Sealed Realities

Back in 2011, Eli Pariser warned that search engines were quietly personalizing what users saw based on their clicks, location, and profiles. Two people could search the same term and get different results without ever knowing it. But those filter bubbles still floated atop a mostly shared basis of fact.

Now, the fight has moved from which stories appear in a list of results to which sources are digested by an answer engine.

This is why the same people who pushed election denial or vaccine conspiracy theories are suddenly fixated on redefining what counts as a “reliable source.” Since fact-checkers and major news outlets have long discredited these narratives, they’re working the Wikipedia referees to turn pseudoscience and propaganda sites into respectable citations. Tucker Carlson implied that it was odd that Sputnik wasn’t treated as a reliable source. Grokipedia quietly turns extremist forums into “just another perspective.”

Meanwhile, people who push back against this effort are reframed as corrupt or insufficiently open-minded: “legacy media,” “the deep state,” “scolds,” “the censorship industrial complex.”

Each of these new systems—Grokipedia, Truth Social’s AI, the parallel media ecosystem they lean on—is a different answer to the same question: who gets to define what’s true? That’s the real battle, now unfolding in the reference layer most people never even see.

Consensus Reality Requires Consensus Infrastructure

Wikipedia isn’t perfect—in my Atlantic article I touch on some of its challenges. But it fundamentally operates on the radical premise that people can collectively determine what’s true through reliable sourcing and methodical deliberation. Disputed neutrality is flagged. Thin sourcing is labeled. Editorial fights happen in public. Accountability is built into the infrastructure.

And yet, that’s also what makes it vulnerable and slow. It can’t algorithmically purge controversy. It has to argue through it.

Ironically, the ethos of arguing through it is also what animates Community Notes, X’s crowdsourced fact-checking feature, which was built before Musk acquired the company. Contributors can suggest a note for a tweet, and other contributors rate the notes to determine which ones show up. Notes only appear when contributors from different perspectives agree that a note is helpful. The system isn’t perfect, but it prioritizes cross-partisan consensus over tribal affiliation. It’s a rare effort to negotiate shared facts from the bottom up. It even uses AI well. LLMs can write notes, and they do so quite effectively: they draft fact-checks in neutral language that humans appreciate, which helps solve problems of scale. But humans remain in the loop.

Grokipedia misses this, because at its core it is, for now, a product of spite rather than a good-faith desire to facilitate human consensus.

The integrity of the internet’s reference layer matters. It’s not flashy. It’s not viral. But it’s where the groundwork for truth is laid. And the more we let partisan systems replace deliberative ones, the less likely it becomes that deeply entrenched factions will ever climb out of their bespoke realities. We’ve already seen a Great Decentralization in which people have migrated to social platforms that align with their preferred political identity. Now we’re entering an era of parallel realities, each one built from its own sources, fed into its own machines, and reflected back as truth.

Further reading:

- ✍️ Renee DiResta, The Atlantic: “The Right-Wing Attack on Wikipedia”

- 📈 Alexios Mantzarlis: “Grokipedia cites a Nazi forum and fringe conspiracy websites”